Can AI Agents Like CodeRabbitAI Really Speed Up Software Teams? A Skeptic's Review

Development teams are always looking for ways to work more efficiently. But can AI-powered code review tools truly accelerate the software development lifecycle, or do they fall short of the hype? As an AI skeptic, I decided to put AI support agents to the test on real pull requests. This article covers my experience with CodeRabbitAI: where it shines and where it stumbles.

Why I'm Wary of AI Code Generation

My background in systems software has instilled a healthy dose of skepticism toward AI code generation. The non-deterministic nature of AI gives me pause, especially when correctness is critical. Over-reliance on AI could also lead to skill atrophy, leaving developers stuck when a problem exceeds what the AI can handle. While I see its potential, I am not keen on using AI to generate code on a large scale.

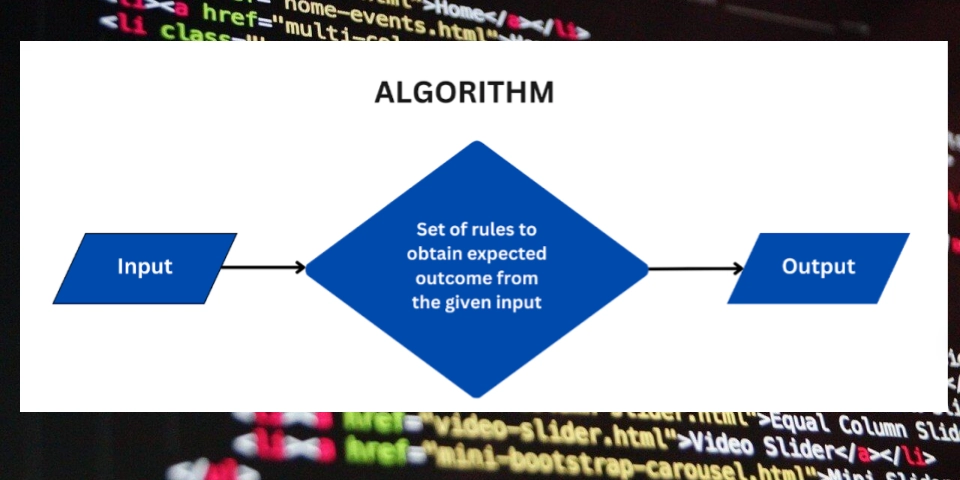

AI Support Agents: A Different Approach to Code Review

Instead of AI code generation, I'm intrigued by AI agents focused on code review. Rather than generating code, these agents act as another developer, providing immediate feedback, suggestions, and potential improvements as soon as a pull request is opened.

Here's why I prefer this approach:

- Forces Understanding: You still have to solve the problem and provide a base solution, ensuring that you understand the issue at hand.

- Promotes Critical Thinking: You're more likely to scrutinize AI suggestions and reject those that don't offer genuine improvements.

- Maintains Skill Level: Your problem-solving skills remain intact, while iteration on reviews becomes much faster.

First Impressions with CodeRabbitAI

To test the impact of quick, continuous AI support for developers, I chose CodeRabbitAI for its free 14-day trial and GitHub integration. CodeRabbitAI uses Claude, an AI model known for its programming prowess. I put it to work on two small personal projects written in Lua, a less common language:

- A NeoVim plugin providing a color scheme.

- A command execution engine.

Initial Test: Minor Fixes, Mixed Results

On the first pull request, implementing fixes in highlight groups for pop-up menus in NeoVim, CodeRabbitAI summarized the PR acceptably, albeit with some inaccuracies. While the walkthrough feature was initially confusing for such a small PR, the linting feedback integration proved surprisingly valuable.

- Pros: Immediate linting feedback directly within pull request comments, a nice quality-of-life improvement.

- Cons: The AI agent essentially just linted the code and turned the output into a nicely formatted comment. It was also verbose and failed to recognize that the PR should have been split into two.
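To illustrate what "lint the code and turn the output into a comment" amounts to, here is a minimal Python sketch. It is not how CodeRabbitAI works internally. The linter line format (`path:line:col: message`), the sample file names, and the helper names `parse_lint_output` and `render_comment` are all assumptions made for illustration.

```python
import re
from collections import defaultdict

# Assumed (hypothetical) linter line format: "<path>:<line>:<col>: <message>".
LINE_RE = re.compile(r"^(?P<path>[^:]+):(?P<line>\d+):(?P<col>\d+): (?P<msg>.+)$")


def parse_lint_output(text):
    """Group findings by file; lines that don't match the assumed format are skipped."""
    findings = defaultdict(list)
    for raw in text.splitlines():
        match = LINE_RE.match(raw.strip())
        if match:
            findings[match["path"]].append((int(match["line"]), match["msg"]))
    return dict(findings)


def render_comment(findings):
    """Render grouped findings as a Markdown comment body."""
    if not findings:
        return "No linter findings."
    total = sum(len(items) for items in findings.values())
    parts = [f"Linter reported {total} finding(s) in {len(findings)} file(s)."]
    for path in sorted(findings):
        parts.append("")
        parts.append(f"**{path}**")
        # Sort findings by line number so the comment reads top to bottom.
        for line_no, msg in sorted(findings[path]):
            parts.append(f"- line {line_no}: {msg}")
    return "\n".join(parts)


# Made-up sample output; the paths and messages are illustrative only.
SAMPLE = """\
lua/theme/init.lua:12:7: unused variable 'opts'
lua/theme/init.lua:4:1: line is too long
lua/theme/pmenu.lua:30:3: shadowing upvalue 'hl'
"""

if __name__ == "__main__":
    print(render_comment(parse_lint_output(SAMPLE)))
```

Even a script this small gets you the quality-of-life part: findings grouped by file, right where the review happens. What it cannot do is judge whether a finding matters, which is where I expected more from the agent.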

Stepping It Up: A More Complex Pull Request

On a more substantial pull request in the command execution repository, CodeRabbitAI surprised me by generating sequence diagrams visualizing the code's control flow. This would be especially useful for onboarding new team members. Even better, it identified a missing shell dependency in a test configuration—a valuable catch!

Conversing with the agent worked, but it shared its suggestions as plain code blocks rather than as suggestions that could be applied directly from the pull request.

However, things weren't perfect when I experimented with the command feature:

- Generating the PR title resulted in a generic and uninformative title.

- Requesting docstrings led to a disastrous pull request that added docstrings to all functions in the affected files, even pre-existing ones, and used emojis in the commit titles.

- Later, it failed to create a pull request, even though it had created one just minutes earlier.

Reality Check: Still Needs Human Oversight

While CodeRabbitAI caught some nitpicks and allowed me to perform simple actions, it missed crucial context from the project history. It failed to notice a duplicate feature implementation and didn't call out sub-optimal design decisions. These agents still cannot replace human reviewers.

Analytics: Measuring the Impact

CodeRabbitAI offers dashboards to track usage and the errors its reviews have uncovered. These analytics are crucial for gauging the impact of AI in software development.

Final Verdict: A Cautious Recommendation

While these tools are far from perfect, I'd welcome them at work as an addition to, not a replacement for, human reviewers. The verbose walkthroughs can be helpful for understanding large PRs. Plus, the near-instant feedback, even with its limitations, is invaluable.

Presenting tool outputs in natural language within PR comments is a significant benefit. As developers take on more responsibilities, AI agents can potentially assist with understanding and acting upon the outputs of various DevSecOps tools.

In conclusion, explore AI support agents like CodeRabbitAI cautiously and understand their limitations, but don't dismiss their potential to augment your development workflow.