Google's A2A Protocol: The Key to Seamless AI Agent Communication?

Ever wondered how to make your AI agents play nice with others? Google's Agent-to-Agent (A2A) protocol might be the answer. Discover how this groundbreaking standard enables AI agents to collaborate, regardless of their underlying framework, and unlock the future of AI interaction.

What is Google's A2A Protocol and Why Should You Care?

The Agent-to-Agent (A2A) protocol is a set of rules released by Google aiming to standardize communication between AI agents. Think of it as a universal translator, allowing AI agents developed in different frameworks (like LangChain or AutoGen) to understand and work with each other. This opens doors for building complex AI ecosystems where specialized AI agents can collaborate to solve problems more effectively. Major players like LangChain, Infosys, and TCS support the A2A protocol, signaling its growing importance in the AI landscape.

A2A vs. MCP: Clearing Up the Confusion

You might be wondering if A2A competes with Model Context Protocol (MCP). The answer is no! They actually complement each other:

- MCP: Connects LLMs to external data sources like APIs and databases.

- A2A: Standardizes communication between AI agents.

Think of MCP as giving agents access to information and A2A as giving them the ability to share that information. Agents built on A2A can communicate seamlessly, regardless of their underlying frameworks or vendors.

5 Core Principles Defining the A2A Protocol

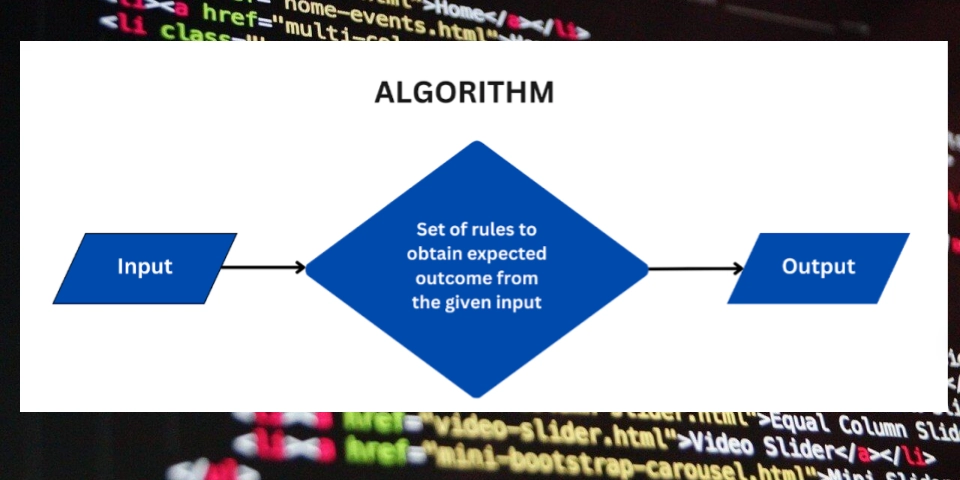

A2A's power lies in its structured approach to agent communication. Here are the five key principles:

- Agent Card: Your Agent's Digital Business Card. This is a standardized "profile" where an agent advertises its capabilities. Other agents can access this “card” to understand what the agent can do, fostering discovery and collaboration.

- Task-Oriented Architecture: "Tasks" as Units of Work. A "Task" represents a request sent to an agent by another agent. The receiving agent processes the request and sends a response, and any agent can act as both client and server. At any moment a task is in one of the following states: submitted, working, input-required, completed, failed, canceled, or unknown.

- Flexible Data Exchange: Speak the Same Language. A2A supports various data types, including plain text, JSON, and files, making it adaptable to different agent interactions.

- Universal Interoperability: Frameworks Don't Matter. A2A enables communication across agent frameworks, whether LangGraph, AutoGen, CrewAI, or Google ADK. This interoperability makes it possible for intricate AI ecosystems to collaborate.

- Security and Adaptability: Secure and Flexible Communication. A2A supports secure authentication, request-response patterns, streaming, and push notifications, which covers both security and adaptability.
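To make the task states from the list above concrete, here is a minimal Python sketch. The `TaskState` enum and the `is_terminal` helper are hypothetical names chosen for illustration, not part of any official SDK, and treating completed, failed, and canceled as final states is an assumption based on how this article describes task completion.

```python
from enum import Enum


class TaskState(str, Enum):
    """Task states as listed in this article (hypothetical enum name)."""

    SUBMITTED = "submitted"
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELED = "canceled"
    UNKNOWN = "unknown"


# Assumption: a task stops changing once it is completed, failed, or canceled.
TERMINAL_STATES = {TaskState.COMPLETED, TaskState.FAILED, TaskState.CANCELED}


def is_terminal(state: TaskState) -> bool:
    """Return True when no further updates are expected for the task."""
    return state in TERMINAL_STATES
```

A client could poll or stream updates until `is_terminal` returns True, and prompt for more input whenever it sees `TaskState.INPUT_REQUIRED`.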

How A2A Works: A Peek Under the Hood

Let's break down the technical details of how A2A enables seamless agent communication:

Key Components

- Agent Card: The agent's public profile, advertising its capabilities.

- A2A Server: The agent application exposing HTTP endpoints.

- A2A Client: Any application or agent consuming A2A server services.
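As a rough illustration of what an Agent Card might contain, the sketch below builds one as a Python dictionary and serializes it to JSON. The field names, the example.com URL, and the `supports` helper are assumptions made for this example; check the official specification for the exact schema.

```python
import json

# Hypothetical Agent Card. Field names are illustrative, not authoritative.
agent_card = {
    "name": "Chart Generator",
    "description": "Turns tabular data into charts.",
    "url": "https://agents.example.com/a2a",  # placeholder endpoint
    "version": "1.0.0",
    "capabilities": {"streaming": True, "pushNotifications": False},
    "skills": [
        {
            "id": "bar-chart",
            "name": "Bar chart",
            "description": "Render a bar chart from rows of data.",
        }
    ],
}


def supports(card: dict, capability: str) -> bool:
    """Check whether a card advertises a capability (hypothetical helper)."""
    return bool(card.get("capabilities", {}).get(capability, False))


# The JSON text an A2A server would publish for clients to discover.
card_json = json.dumps(agent_card, indent=2)
```

A client that needs streaming could call `supports(agent_card, "streaming")` before deciding how to talk to the server.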

Data Structures: Building Blocks of Communication

- Task: A work unit, including a unique ID, status, artifacts (outputs), history, and metadata.

- Message: A communication turn within a Task, specifying the role ("user" or "agent"), content ("parts"), and metadata.

- Part: The content unit, which can be TextPart (plain text), FilePart (file content), or DataPart (JSON data).

- Artifact: Output generated by task execution, such as files, images, or structured data results.
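The data structures above could be modeled in Python roughly as follows. This is a sketch under stated assumptions: the class names mirror the terms used in this article, but the exact attribute names (for example `name` and `content` on `FilePart`) are guesses for illustration rather than the protocol's actual schema.

```python
import uuid
from dataclasses import dataclass, field
from typing import Any, Union


@dataclass
class TextPart:
    text: str  # plain text


@dataclass
class FilePart:
    name: str  # assumed attribute
    content: bytes  # file content


@dataclass
class DataPart:
    data: dict[str, Any]  # JSON data


Part = Union[TextPart, FilePart, DataPart]


@dataclass
class Message:
    role: str  # "user" or "agent"
    parts: list[Part]
    metadata: dict[str, Any] = field(default_factory=dict)


@dataclass
class Artifact:
    name: str  # assumed attribute
    parts: list[Part]


@dataclass
class Task:
    id: str = field(default_factory=lambda: str(uuid.uuid4()))
    status: str = "submitted"
    artifacts: list[Artifact] = field(default_factory=list)
    history: list[Message] = field(default_factory=list)
    metadata: dict[str, Any] = field(default_factory=dict)


# Usage: a new task whose history holds one user message.
task = Task()
task.history.append(Message(role="user", parts=[TextPart("Plot monthly sales")]))
```

Each `Task` gets its own unique ID by default, and its `history` grows by one `Message` per communication turn.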

Communication Flow: A Step-by-Step Guide

- Discovery: The client agent fetches the server agent's Agent Card from /.well-known/agent.json.

- Initiation: The AI Client generates a unique Task ID and sends an initial message.

- Processing: The server agent handles the request either synchronously or by streaming updates as it works.

- Interaction: Multi-turn exchanges are supported when the server requests more input.

- Completion: The Task ends in a completed, failed, or canceled state.
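The five steps above can be sketched with a toy, in-memory simulation. Nothing here touches the network or a real A2A library: `ToyServerAgent`, `run_client`, and the dictionary layout are all hypothetical and exist only to show the order of events, including one round of input-required interaction.

```python
import uuid


class ToyServerAgent:
    """In-memory stand-in for an A2A server (hypothetical, no HTTP involved)."""

    def __init__(self) -> None:
        self.tasks: dict[str, dict] = {}

    def get_card(self) -> dict:
        # Stands in for fetching /.well-known/agent.json.
        return {"name": "Toy Research Agent", "skills": ["summarize"]}

    def send(self, task_id: str, text: str) -> dict:
        task = self.tasks.setdefault(
            task_id, {"id": task_id, "state": "submitted", "history": []}
        )
        task["history"].append({"role": "user", "text": text})
        if len(task["history"]) == 1:
            # First turn: the server asks the client for more input.
            task["state"] = "input-required"
            reply = "Which topic should I summarize?"
        else:
            # Later turn: the server has what it needs and finishes.
            task["state"] = "completed"
            reply = f"Summary for topic: {text}"
        task["history"].append({"role": "agent", "text": reply})
        return task


def run_client(server: ToyServerAgent, first: str, follow_up: str) -> dict:
    card = server.get_card()  # 1. Discovery
    if "summarize" not in card["skills"]:
        raise ValueError("server agent does not offer the needed skill")
    task_id = str(uuid.uuid4())  # 2. Initiation: unique Task ID
    task = server.send(task_id, first)  # 3. Processing
    if task["state"] == "input-required":  # 4. Interaction: second turn
        task = server.send(task_id, follow_up)
    return task  # 5. Completion


final = run_client(ToyServerAgent(), "Please summarize something", "A2A protocol")
```

In a real deployment each `send` call would be an HTTP request to the server's endpoint, and the client would also handle failed and canceled outcomes.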

Real-World A2A Applications: Unleashing the Potential

The possibilities with A2A are vast. Here are a few examples:

- Multi-Agent Collaboration: A personal assistant agent collaborates with a research agent for information gathering, or a coding agent requests help from a chart generation agent.

- Agent Marketplaces: Imagine app stores for AI agents, where specialized agents offer services through standardized A2A interfaces.

- Enhanced User Experiences: End users benefit from more capable AI systems that seamlessly leverage specialized knowledge when and as needed.

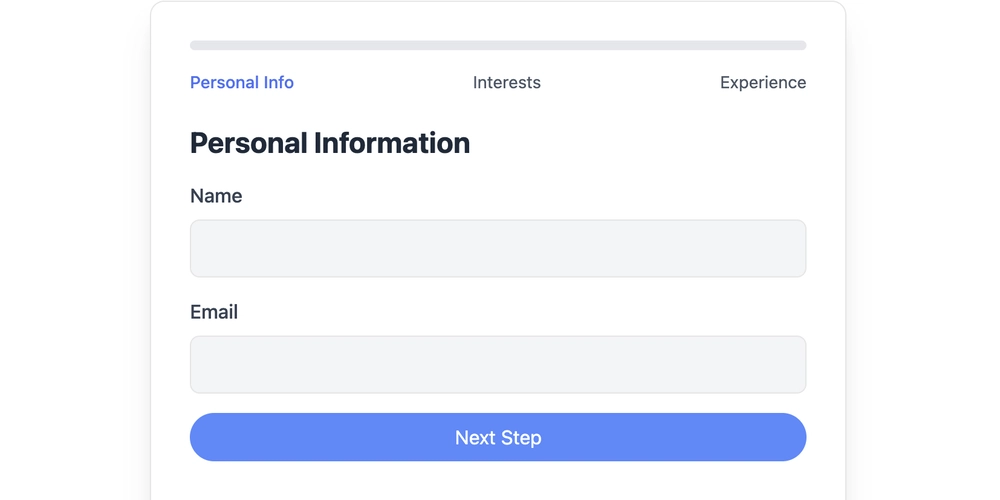

Getting Started with Google's A2A Protocol

Ready to implement A2A in your own agent systems? Here's how to start:

- Study the documentation: Read the official documentation to learn more about the A2A protocol.

- Develop your Agent Card: Create a JSON detailing your agent's abilities.

- Set Up A2A server endpoints: Incorporate the JSON-RPC methods required for the protocol.

- Test: Verify that your implementation works correctly with other systems.
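Since the server endpoints speak JSON-RPC, a first experiment might be to build and parse JSON-RPC 2.0 envelopes by hand. The envelope shape below follows the JSON-RPC 2.0 specification, but the method name "tasks/send", the params layout, and the helper names are assumptions for illustration; confirm the actual method names against the official A2A documentation.

```python
import json
import uuid


def build_request(method: str, params: dict) -> str:
    """Wrap params in a JSON-RPC 2.0 request envelope."""
    return json.dumps(
        {
            "jsonrpc": "2.0",
            "id": str(uuid.uuid4()),  # request ID used to match the response
            "method": method,
            "params": params,
        }
    )


def parse_response(raw: str) -> dict:
    """Return the result of a JSON-RPC 2.0 response, or raise on an error."""
    body = json.loads(raw)
    if "error" in body:
        err = body["error"]
        raise RuntimeError(f"JSON-RPC error {err.get('code')}: {err.get('message')}")
    return body["result"]


# Assumed method name and params layout, shown only as an example payload.
request = build_request(
    "tasks/send",
    {
        "id": "task-123",
        "message": {"role": "user", "parts": [{"type": "text", "text": "Hello"}]},
    },
)
```

Keeping envelope handling in two small functions makes it easy to test your server against hand-written requests before wiring in a full client.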

The Future of AI is Collaborative

The Agent-to-Agent (A2A) protocol tackles a major roadblock in AI: making different AI agents work together. With support from industry giants, A2A is poised to become a key enabler of AI collaboration. By letting diverse AI systems communicate through one standard, A2A allows them to accomplish together what none could do alone.

Just like the internet connected computers, A2A connects AI agents, regardless of their origin, opening the door for a future where AI systems collaborate to solve complex problems and enhance our lives.