Google's A2A Protocol: Connect AI Agents for Seamless Collaboration

Ready to explore the future of AI collaboration? Google's Agent-to-Agent (A2A) protocol is revolutionizing how AI agents communicate and work together. Supported by industry giants like LangChain and Infosys, A2A provides a standardized framework for AI systems built on different platforms to interact seamlessly. Imagine AI agents with diverse specializations collaborating to solve complex problems. Let's dive into how A2A achieves this groundbreaking interoperability.

A2A vs. MCP: What's the Difference?

Confused about A2A and MCP (Model Context Protocol)? They actually complement each other!

- MCP: Connects Large Language Models (LLMs) like GPT to external data sources like APIs, databases, and file systems.

- A2A: Standardizes communication between AI agents, enabling them to exchange information and collaborate on tasks.

Think of MCP as giving AI agents access to knowledge, while A2A provides the language they use to talk to each other. Developing agents with A2A ensures they can easily communicate, regardless of their underlying framework.

5 Key Principles of Google's Agent-to-Agent Protocol

A2A isn't just a concept; it's a practical framework built on key principles:

1. Agent Card: Your AI's Digital Business Card

Each agent has an "Agent Card": a digital profile outlining its capabilities. It's like a digital business card describing what the agent can do and which kinds of input it can process. Other agents typically retrieve it with an HTTP GET request to /.well-known/agent.json.

- Agents exchange cards to understand each other's skills and services.

- This allows for quick identification of appropriate collaborators.

Here’s an example of an Agent Card for a Bhagavad Gita chatbot:

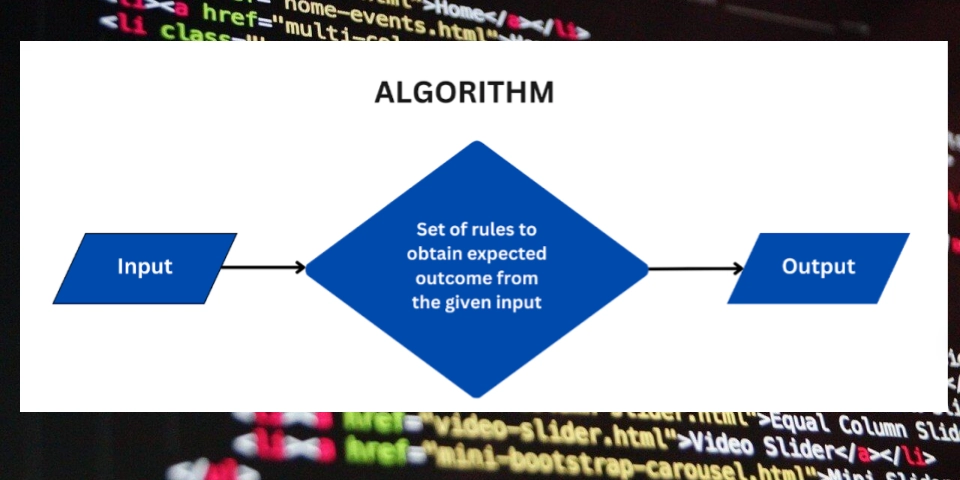
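As a rough text counterpart, here is a minimal sketch of what such a card might contain, built as a Python dictionary and serialized to JSON. The field names follow the general shape of an A2A Agent Card, but the agent name, URL, and skill entries are made up for illustration, and the helper `find_skills` is hypothetical; check the official schema before relying on any field.

```python
import json

# Hypothetical Agent Card for a Bhagavad Gita chatbot.
# Every value here (name, URL, skill id, tags) is an illustrative assumption.
agent_card = {
    "name": "Gita Guide",
    "description": "Answers questions about the Bhagavad Gita.",
    "url": "https://example.com/a2a",
    "version": "1.0.0",
    "capabilities": {"streaming": True, "pushNotifications": False},
    "defaultInputModes": ["text"],
    "defaultOutputModes": ["text"],
    "skills": [
        {
            "id": "verse_lookup",
            "name": "Verse lookup",
            "description": "Finds and explains a verse by chapter and number.",
            "tags": ["gita", "scripture"],
        }
    ],
}


def find_skills(card: dict, tag: str) -> list[str]:
    """Return the ids of skills in the card that carry the given tag."""
    return [s["id"] for s in card.get("skills", []) if tag in s.get("tags", [])]


# This JSON document is what a server would return for the card request.
card_json = json.dumps(agent_card, indent=2)
```

A client that has fetched a card could call something like `find_skills(agent_card, "gita")` to decide whether this agent is a suitable collaborator.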

2. Task-Oriented Architecture: Breaking Down the Work

A2A uses a task-oriented approach. A "Task" is a request sent to an agent by another agent. The remote agent processes the request and sends a response back.

- An agent can act as both a client (requesting a task) and a server (processing a task).

- Tasks move through defined states like Submitted, Working, Completed, or Failed.
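The task lifecycle can be pictured as a small state machine. The sketch below is only an illustration: the state names mirror the ones mentioned in this article, while the transition table `ALLOWED` and the helper `advance` are assumptions, not something the protocol prescribes in this form.

```python
from enum import Enum


class TaskState(str, Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELED = "canceled"


# States after which a task no longer changes.
TERMINAL_STATES = {TaskState.COMPLETED, TaskState.FAILED, TaskState.CANCELED}

# Assumed transition table, for illustration only.
ALLOWED = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.FAILED, TaskState.CANCELED},
    TaskState.WORKING: {TaskState.COMPLETED, TaskState.FAILED, TaskState.CANCELED},
}


def advance(current: TaskState, new: TaskState) -> TaskState:
    """Return the new state, or raise if the move is not in the table."""
    if new not in ALLOWED.get(current, set()):
        raise ValueError(f"cannot move from {current.value} to {new.value}")
    return new
```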

3. Data Exchange: Talking in Multiple Languages

A2A supports various data types, including:

- Plain text

- Structured JSON

- Files (inline or via URI)

This adaptability allows agents to interact in a wide variety of scenarios.
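To make the three kinds of content concrete, here is a small sketch of helper functions that build each one as a plain dictionary. The helper names are hypothetical, and the key names ("type", "text", "data", "file", "mimeType", "bytes", "uri") are an assumption about the wire format that should be checked against the official schema.

```python
import base64


def text_part(text: str) -> dict:
    """Plain text content."""
    return {"type": "text", "text": text}


def data_part(data: dict) -> dict:
    """Structured JSON content."""
    return {"type": "data", "data": data}


def file_part_inline(name: str, mime_type: str, content: bytes) -> dict:
    """A file sent inline, with its bytes base64-encoded."""
    encoded = base64.b64encode(content).decode("ascii")
    return {"type": "file", "file": {"name": name, "mimeType": mime_type, "bytes": encoded}}


def file_part_uri(name: str, mime_type: str, uri: str) -> dict:
    """A file referenced by URI instead of being embedded."""
    return {"type": "file", "file": {"name": name, "mimeType": mime_type, "uri": uri}}
```

Inline files are convenient for small payloads; a URI avoids inflating the message when the file is large.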

4. Universal Interoperability: Agents Without Borders

A2A enables agents built with different frameworks (like LangGraph, AutoGen, and CrewAI) to communicate easily. This interoperability is crucial for complex AI ecosystems: regardless of the underlying framework, A2A supports secure agent-to-agent communication.

5. Security and Flexibility: Safe and Adaptable

A2A offers:

- Secure authentication schemes

- Request-response patterns

- Streaming via Server-Sent Events (SSE)

- Push notifications via webhooks

This blend of security and adaptability makes A2A a robust solution.
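Streaming over Server-Sent Events means the client reads a text stream in which each event arrives as one or more "data:" lines followed by a blank line. The sketch below parses such a stream into JSON objects. The function name `parse_sse` and the shape of the sample payloads are assumptions for illustration; only the "data:" and blank-line framing comes from the SSE format itself.

```python
import json
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one decoded JSON payload per SSE event."""
    buffer: list[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line.startswith("data:"):
            # Collect the payload; an event may span several data lines.
            buffer.append(line[5:].lstrip())
        elif line == "" and buffer:
            # A blank line ends the event.
            yield json.loads("\n".join(buffer))
            buffer = []
        # Other lines (comments starting with ":", other fields) are ignored here.


# Made-up sample stream with two updates for one task.
sample = [
    'data: {"id": "t1", "state": "working", "final": false}',
    "",
    ": keep-alive comment",
    'data: {"id": "t1", "state": "completed", "final": true}',
    "",
]
events = list(parse_sse(sample))
```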

How A2A Works: Peeking Under the Hood

Let's look at the core components and communication flow of A2A:

Core Components Explained

- Agent Card: The agent's public profile.

- A2A Server: The agent application that exposes HTTP endpoints implementing the A2A protocol methods.

- A2A Client: Any application or agent that uses the A2A server's services.

Messages and Data Structures

- Task: The central unit of work (identified by a unique ID). It includes status, optional artifacts (outputs), conversation history, and metadata.

- Message: A turn in communication within a Task. It includes the role (user or agent) and content (parts).

- Part: The fundamental unit of content. It can be a TextPart, FilePart, or DataPart (JSON).

- Artifact: Output generated during task execution.
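How these structures nest can be sketched with a few dataclasses. This is a simplified model for illustration, not the official schema: the class and field names are assumptions, and the task status is flattened to a single `state` string.

```python
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class Message:
    role: str          # "user" or "agent"
    parts: list[dict]  # each part is a text, file, or data part


@dataclass
class Artifact:
    parts: list[dict]
    name: Optional[str] = None


@dataclass
class Task:
    id: str
    state: str = "submitted"
    history: list[Message] = field(default_factory=list)
    artifacts: list[Artifact] = field(default_factory=list)
    metadata: dict = field(default_factory=dict)


# A task that received one user message and produced one artifact.
task = Task(id="task-123")
task.history.append(
    Message(role="user", parts=[{"type": "text", "text": "Summarize chapter 2."}])
)
task.artifacts.append(
    Artifact(name="summary", parts=[{"type": "text", "text": "A short summary."}])
)
task.state = "completed"

task_as_dict = asdict(task)  # nested dict, ready for JSON serialization
```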

Communication Flow – A Step-by-Step Look

- Discovery: The client agent retrieves the server agent's AgentCard.

- Initiation: The client generates a unique Task ID and sends an initial message using tasks/send.

- Processing: The server agent handles the request, either synchronously or with streaming updates via tasks/sendSubscribe.

- Interaction: Supports multi-turn conversations.

- Completion: The task reaches a terminal state (completed, failed, or canceled). A client can request cancellation with tasks/cancel.
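From the client's side, the initiation, interaction, and cancellation steps come down to building JSON-RPC 2.0 requests. The sketch below only constructs the payloads and does not send them. The function names are hypothetical, and the layout of `params` (a task "id" plus a "message" with "role" and "parts") is an assumption to verify against the official documentation.

```python
import uuid
from typing import Optional


def build_send_request(text: str, task_id: Optional[str] = None) -> dict:
    """Build a tasks/send request; reuse task_id to continue a conversation."""
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),  # JSON-RPC request id
        "method": "tasks/send",
        "params": {
            "id": task_id or str(uuid.uuid4()),  # client-generated Task ID
            "message": {"role": "user", "parts": [{"type": "text", "text": text}]},
        },
    }


def build_cancel_request(task_id: str) -> dict:
    """Build a tasks/cancel request for an existing task."""
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),
        "method": "tasks/cancel",
        "params": {"id": task_id},
    }


first = build_send_request("Explain verse 2.47")
follow_up = build_send_request("Give a shorter version", task_id=first["params"]["id"])
cancel = build_cancel_request(first["params"]["id"])
```

In a real client, each payload would be sent as an HTTP POST to the URL advertised in the server's Agent Card.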

Standard JSON-RPC Methods

A2A defines the following methods, which are carried over standard JSON-RPC 2.0:

- tasks/send: Initiates or continues a task (single response).

- tasks/sendSubscribe: Initiates a task with streaming updates.

- tasks/get: Retrieves the state of a specific task.

- tasks/cancel: Requests cancellation of an ongoing task.

- tasks/pushNotification/set: Configures a webhook for receiving updates.

- tasks/pushNotification/get: Retrieves notification settings.

- tasks/resubscribe: Reconnects to an existing task's stream.
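On the server side, these methods amount to a dispatch table keyed by method name. The following is a minimal in-memory sketch covering only tasks/send, tasks/get, and tasks/cancel, with no HTTP layer, streaming, or authentication. The handler names, the echo behavior, and the stored task layout are all assumptions; the error codes are the generic JSON-RPC 2.0 ones.

```python
TASKS: dict[str, dict] = {}  # in-memory task store, keyed by Task ID
TERMINAL = {"completed", "failed", "canceled"}


def handle_send(params: dict) -> dict:
    message = params["message"]
    text = " ".join(p["text"] for p in message["parts"] if p.get("type") == "text")
    task = TASKS.setdefault(params["id"], {"id": params["id"], "history": []})
    task["history"].append(message)
    # A real agent would do its work here; this stub just echoes the input.
    task["status"] = {"state": "completed"}
    task["artifacts"] = [{"parts": [{"type": "text", "text": "echo: " + text}]}]
    return task


def handle_get(params: dict) -> dict:
    return TASKS[params["id"]]  # KeyError if the task is unknown


def handle_cancel(params: dict) -> dict:
    task = TASKS[params["id"]]
    if task["status"]["state"] not in TERMINAL:
        task["status"] = {"state": "canceled"}
    return task


METHODS = {
    "tasks/send": handle_send,
    "tasks/get": handle_get,
    "tasks/cancel": handle_cancel,
}


def dispatch(request: dict) -> dict:
    """Route one JSON-RPC request to its handler and wrap the outcome."""
    base = {"jsonrpc": "2.0", "id": request.get("id")}
    handler = METHODS.get(request.get("method"))
    if handler is None:
        return {**base, "error": {"code": -32601, "message": "Method not found"}}
    try:
        return {**base, "result": handler(request.get("params", {}))}
    except KeyError:
        return {**base, "error": {"code": -32602, "message": "Invalid params"}}
```

A web framework route would parse the POST body, pass it to `dispatch`, and return the resulting dictionary as JSON.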

A2A in Action: Real-World Examples

Imagine the possibilities with seamless agent collaboration:

- Multi-Agent Collaboration: A personal assistant collaborates with a research agent to gather information, or a coding agent requests help from a visualization agent.

- Agent Marketplaces: Specialized agents offer services through standardized interfaces, leading to vibrant AI ecosystems.

- Enhanced User Experiences: Users benefit from AI systems that leverage specialized knowledge and capabilities as needed.

Getting Started with A2A

Ready to implement A2A in your own agents? Follow these steps:

- Learn the Protocol: Review the official A2A documentation and examples.

- Implement an Agent Card: Create a JSON file describing your agent's capabilities.

- Set Up A2A Server Endpoints: Implement the required JSON-RPC methods.

- Test: Ensure your implementation works with other A2A-compatible agents.

The Future of AI is Collaborative

The Agent-to-Agent protocol marks a major step forward in AI. By enabling effective communication between diverse AI systems, A2A unlocks possibilities previously out of reach. With support from industry leaders, expect rapid adoption and expansion of A2A. This protocol addresses a core challenge: interoperability between agents on different platforms. It's like a universal passport for AI agents, simplifying connections and collaborations. As AI becomes increasingly specialized, A2A will be essential for building complex, powerful AI ecosystems. The future isn't just about individual AI models; it is also about agent-to-agent communication that lets them achieve bigger goals together.

Conclusion: Embrace the A2A Revolution

The future isn't just about individual models becoming more powerful; it's about enabling collaboration between diverse AI systems to achieve more together than they could alone. Implementing Google's A2A protocol is one way to start building that next generation of AI agent communication.
