Is AI Breaking Your API? Discover How Harm Limiting Can Protect Your System

Are you struggling to control how AI uses your API data? Traditional rate limits control how often a client calls your API, not what it does with the response. Learn how harm limiting offers a smarter, more ethical way to manage AI interactions and safeguard your data.

The API Problem: AI vs. Rate Limits

Traditional rate limiting was designed for human users—browsers and apps—making predictable requests. Now, AI agents independently access your APIs, making decisions, pulling data, and even posting content. This shift exposes critical vulnerabilities that traditional API rate limits can't address.

- AI Misunderstands Context: Unlike humans, AI doesn't inherently grasp ethical boundaries or usage restrictions.

- Data Misuse: Rate limits don't prevent an AI from misusing data or publishing inappropriate content.

- New Risks Emerge: Your APIs are now exposed to risks that rate limits simply don't cover.

Is it time to rethink your API security approach and protect your data from these emerging AI threats?

Introducing Harm Limiting: A New API Guardrail

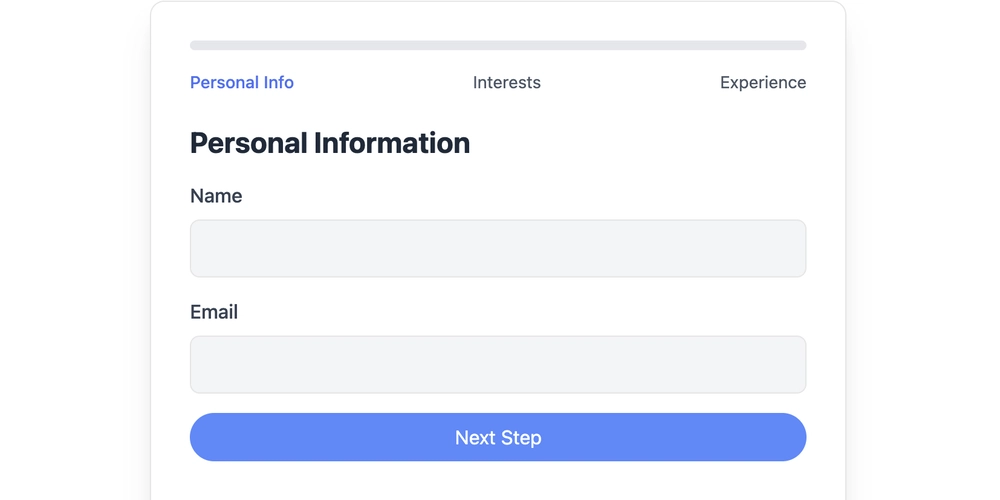

Harm limiting shifts the focus from how often an AI calls your API to how it uses the data. This approach involves embedding contextual metadata within API responses to guide AI behavior.

- Tag your responses: Add metadata to indicate whether data is safe for public reuse, requires human review, or is for internal use only.

- Influence, don't just restrict: The goal isn't to stop requests, but to influence AI behavior and promote responsible data usage.

- Structure for machines: Provide machine-readable guidance that AI clients can easily interpret and adhere to.

Together, these practices help protect your APIs from potential AI misuse and encourage more ethical data handling.
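As a rough illustration of the tagging idea, here is a minimal sketch in Python. Everything in it is an assumption made for the example: the `_usage` envelope key, the `usage_policy` and `purpose` field names, and the three tag values are hypothetical and do not come from any published standard.

```python
import json
from dataclasses import asdict, dataclass
from enum import Enum


class UsagePolicy(str, Enum):
    """Hypothetical tag vocabulary mirroring the three cases in the text."""
    PUBLIC_REUSE = "public-reuse"
    HUMAN_REVIEW = "human-review-required"
    INTERNAL_ONLY = "internal-only"


@dataclass
class UsageMeta:
    """Machine-readable guidance attached to a response (assumed shape)."""
    usage_policy: str
    purpose: str


def tag_response(data: dict, policy: UsagePolicy, purpose: str) -> dict:
    """Wrap a payload in an envelope that carries usage guidance."""
    meta = UsageMeta(usage_policy=policy.value, purpose=purpose)
    return {"data": data, "_usage": asdict(meta)}


response = tag_response(
    {"customer_id": 42, "notes": "Called about a billing dispute."},
    UsagePolicy.INTERNAL_ONLY,
    "support triage",
)
print(json.dumps(response, indent=2))
```

The payload itself is unchanged. The guidance travels next to it, so an AI client that understands the tags can read them, and a client that doesn't can still read the data.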

Harm Limiting in Action: What's Already Happening

The concept of harm limiting is gaining traction, with initiatives emerging to define standards and best practices.

- Model Context Protocol (MCP): This specification enables AI clients to negotiate access with clearly defined usage boundaries. Early drafts by Anthropic and experiments by teams like WunderGraph are promising.

- Metadata with Purpose: Think of this approach as providing metadata loaded with clear instructions. Let AI know not just what data it's receiving, but what it's for.

- AI Safety Standards: Organizations are already implementing AI safety standards, highlighting the urgent need for robust API guardrails.

Adopting these strategies encourages responsible AI interaction with your API.

Teamwork Makes the Dream Work: A Collaborative Approach to API Protection

Effective harm limiting requires a collaborative strategy involving platform providers, app developers, and end users.

- Shared Responsibility Model: Adopt a framework similar to Microsoft's AI Shared Responsibility Model, which distributes accountability across stakeholders.

- Community-Driven Standards: Advocate for consistent guidelines and support teams developing AI safety standards.

- Proactive Protection: Implement harm limiting as a proactive measure to prevent issues before they arise.

This collaborative approach strengthens API defenses and promotes broader AI safety.

Overcoming the Challenges of Harm Limiting

While harm limiting shows promise, challenges remain in establishing universal standards and ensuring consistent adoption. These challenges can be overcome.

- Universal Standards Needed: Work towards creating a universal standard for tagging API data that AI models can reliably understand.

- Frameworks like MAESTRO: Use resources like the Cloud Security Alliance's MAESTRO framework to identify and address AI-specific threats.

- USB-C Moment: Remember the transition to USB-C: a slow start, but now ubiquitous. The evolution of harm limiting could follow a similar path.

Rethinking API Protection: It Starts Now

AI agents now act on API data rather than just reading it, which increases the need for harm limiting strategies.

- Shape Data Usage: Focus on shaping what happens after data leaves your system by embedding instructions within API responses.

- Clear API Communication: Help your API communicate, "Here's the data, and here's what you should (and shouldn't) do with it."

- Stay Ahead of the Curve: Embrace harm limiting to proactively address AI-driven API risks and responsibilities.
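To make the "should (and shouldn't)" idea concrete, here is a minimal sketch of how a cooperating AI client might check embedded guidance before acting. The `_usage` key, the tag values, and the action names are all hypothetical, chosen only for this example. Treating a missing or unknown tag as the most restrictive case is a design assumption, not an established rule.

```python
# Hypothetical mapping from usage tags to the actions a client permits itself.
ACTIONS_BY_POLICY = {
    "public-reuse": {"read", "summarize", "publish"},
    "human-review-required": {"read", "summarize"},
    "internal-only": {"read"},
}

# Fallback for missing or unrecognized tags: the most restrictive set.
DEFAULT_ACTIONS = {"read"}


def allowed_actions(response: dict) -> set:
    """Return the actions permitted by the response's usage tag."""
    usage = response.get("_usage")
    policy = usage.get("usage_policy") if isinstance(usage, dict) else None
    if not isinstance(policy, str):
        return DEFAULT_ACTIONS
    return ACTIONS_BY_POLICY.get(policy, DEFAULT_ACTIONS)


def can_perform(response: dict, action: str) -> bool:
    """Check a single intended action against the embedded guidance."""
    return action in allowed_actions(response)


tagged = {
    "data": {"headline": "Q3 results"},
    "_usage": {"usage_policy": "human-review-required"},
}
untagged = {"data": {"headline": "Q3 results"}}

print(can_perform(tagged, "summarize"))  # True
print(can_perform(tagged, "publish"))    # False
print(can_perform(untagged, "publish"))  # False
```

A check like this only works when the client chooses to honor it. That is why harm limiting is framed as influencing behavior, not enforcing it.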

Don't wait for problems to emerge; take control of your API's future today.