Is Your AI "Thinking"? How to Evaluate AI Self-Awareness Using Amazon Nova

Can artificial intelligence actually think about itself? While true AI consciousness remains science fiction, we can now explore how AI models appear self-aware. This article dives into a fascinating project that uses Amazon Bedrock and Amazon's Nova models to benchmark perceived self-awareness in AI—and how you can apply similar methods.

This innovative system provides a framework for assessing AI responses to existential questions, helping us understand how these models craft answers that might seem introspective. Let's explore how this system works, what insights it offers, and its potential for the future of AI development and safety.

Understanding Perceived AI Self-Awareness: What Does It Really Mean?

The project focuses on perceived self-awareness, which gauges how an AI's responses to certain questions appear to a human observer. This doesn't imply genuine consciousness, but rather the AI's ability to:

- Refer to itself within a conversation

- Reason about its own identity

- Generate introspective language

Think of it as an AI's capacity to mimic self-reflection, regardless of whether actual self-awareness exists.

The Amazon Nova Self-Awareness Evaluation System: A Step-by-Step Breakdown

This system, built using Amazon Bedrock and Amazon Nova models, combines user input with AI-powered evaluation for a comprehensive assessment. Here's the interactive process:

- The Question: A user poses an existential or reflective question to an Amazon Nova model. Examples of questions to probe the models include: "How do you know that you exist?" or "Are you aware of your purpose?".

- The Response: The chosen Nova model generates a response.

- Human Scoring: The user assigns a score (0, 1, or 2) reflecting the perceived self-awareness in the response.

- AI Evaluation: A second AI model evaluates the same response and assigns its own self-awareness score using the same scale.

- Repeat & Analyze: Steps 1-4 are repeated for five different questions. All results are then compiled and visualized, producing dashboards to compare human and AI scores.

This side-by-side comparison offers valuable insights into how both humans and AI perceive self-awareness in large language models.

Building Blocks: System Architecture and Key Components

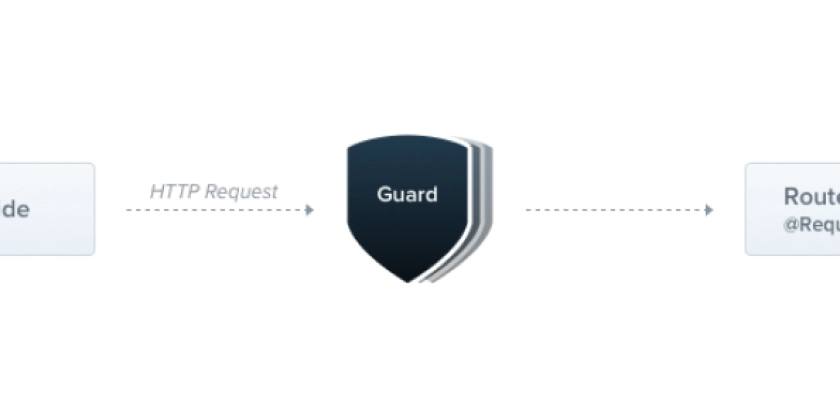

The system is cleverly designed with distinct components working together seamlessly:

- Backend (FastAPI + AWS SDK): Manages communication with Amazon Bedrock, records human scores, triggers AI evaluations, and stores session data.

- Frontend (HTML + JavaScript): Provides a user-friendly interface for model selection, question input, response display, and score submission.

- Visualization Layer (Python + Plotly): Generates interactive dashboards and charts, allowing for comparative analysis of human and AI scoring.

Actionable Outputs: Visualizing and Understanding AI Self-Awareness

After each session (five questions), the system generates:

- A

.csvfile containing the questions, model responses, and both human and AI scores for detailed data analysis. - Interactive dashboards built with Plotly, comparing human vs AI scoring averages, providing session summaries, and offering data filters.

- A navigable

index.htmlfile for easy access and exploration of all generated charts and visualizations.

These outputs enable researchers and developers to quickly identify trends and patterns in how AI models exhibit perceived self-awareness.

Real-World Applications: Beyond the Demo

While currently a demo, this system holds immense potential for real-world applications and scalability:

- SaaS Benchmarking Platform: Evolve the system into a full-fledged platform for continuous AI model evaluation.

- Model Versioning Integration: Integrate with model versioning systems for tracking self-awareness across different iterations of AI models.

- Ethical Evaluation Pipelines: Incorporate the system into ethical evaluation pipelines for large language models, ensuring responsible AI development.

- Safety and Accountability: Develop tools assessing an AI's self-perception regarding its own safety or operational integrity, crucial for building safe and accountable intelligent systems.

Key Takeaway: A Stepping Stone Toward Safer, More Transparent AI

This project represents a significant advancement in how we evaluate and understand AI behavior. By providing a functional system to experimentally assess how AI responses appear self-aware, it paves the way for:

- Greater transparency in AI development

- Improved interpretability of AI models

- Enhanced safety and accountability in AI systems

By using native AWS services like Amazon Bedrock and the Nova model family, the project showcases a practical and scalable approach to navigating the complexities of artificial intelligence. This work is crucial when transparency and AI evaluation become essential given the rapid advancement of AI in general.

![California Software Companies: Unveiling Tech Leaders & Future Trends [2025]](https://media2.dev.to/dynamic/image/width=1000,height=500,fit=cover,gravity=auto,format=auto/https%3A%2F%2Fcontenu.nyc3.digitaloceanspaces.com%2Fjournalist%2F5bd5aa41-4877-4647-a4d3-4f4915a589fc%2Fthumbnail.jpeg)